Department of Computer Science and Computer Engineering

504 J. B. Hunt Building

1 University of Arkansas

Fayetteville, AR 72701

Phone: (479) 575-6197

Fax: (479) 575-5339

Dr. Bobda Awarded NSF Grant

The National Science Foundation (NSF) has awarded Dr. Christophe Bobda, Professor of Computer Engineering, $477,870.00 to conduct research in Reconfigurable In-Sensor Architectures for High Speed and Low Power In-situ Image Analysis. Cameras are pervasively used for surveillance and monitoring applications and can capture a substantial amount of image data.

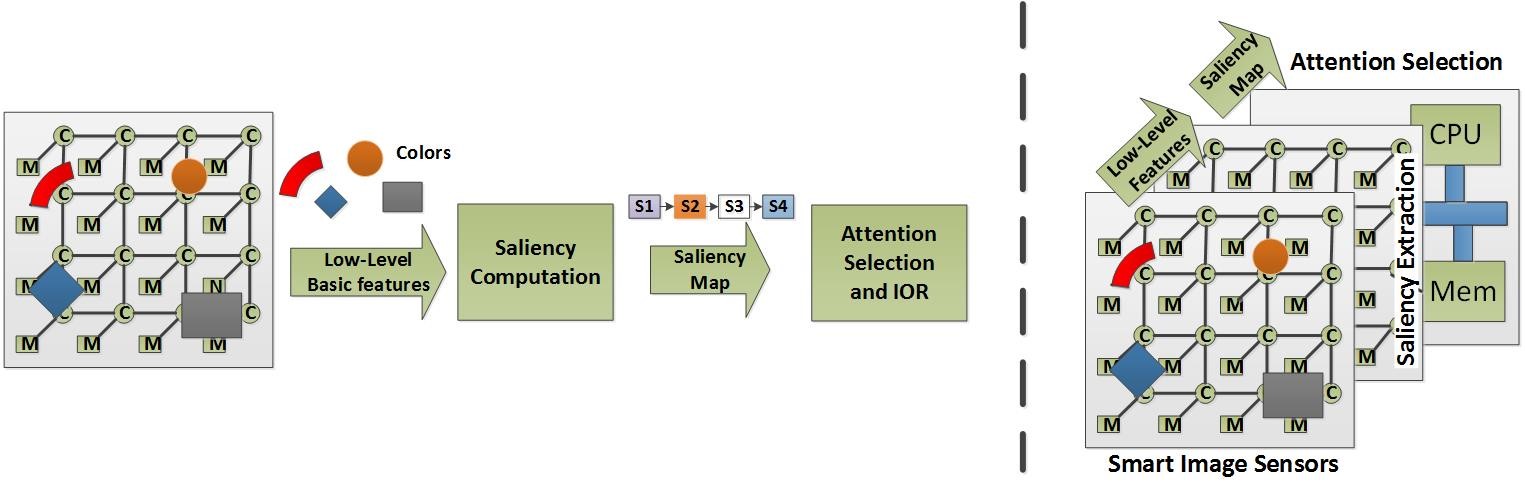

The processing of this data, however, is performed either a posteriori (after capture) or on powerful backend servers. While a posteriori, non-real-time video analysis may be sufficient for some applications, it does not suffice for applications such as autonomous navigation in complex environments or hyperspectral image analysis using cameras on drones, which require near-real-time video and image analysis, sometimes under SWaP (size, weight, and power) constraints. Future big data challenges in real-time imaging can be overcome by pushing computation into the image sensor. The resulting systems will exploit the massively parallel nature of sensor arrays to reduce the amount of data analyzed at the processing unit.

To overcome the limitations of existing architectures, the goal of this research is the design of a highly parallel, hierarchical, reconfigurable, and vertically integrated 3D sensing-computing architecture, along with high-level synthesis methods for real-time, low-power video analysis. The architecture is composed of hierarchical, intertwined planes, each of which consists of computational units. The lowest-level plane processes pixels in parallel to determine low-level shapes in an image, while higher-level planes use outputs from low-level planes to infer global features in the image.

The proposed architecture presents three novel contributions: a hierarchical, configurable architecture for parallel feature extraction in video streams; a machine-learning-based relevance-feedback method that adapts computational performance and resource usage to the relevance of the input data; and a framework for converting sequential image processing algorithms into multiple layers of parallel computational processing units in the sensor.

The results of this project can be used in other fields where large amounts of processing need to be performed on data collected by generic sensors deployed in the field. Furthermore, mechanisms for translating sequential constructs into functionally equivalent hardware accelerators will lead to highly parallel sensing units that can perform domain-specific tasks more efficiently.

October 2016